TL;DR

- Event order is critical: For many applications, the sequence of events is not just important; it’s the entire basis of their logic.

- Kafka’s guarantee: Kafka only guarantees the order of messages within a single partition.

- The solution: To ensure related events are processed sequentially, you must send them all with the same message key (e.g., a ride_id or user_id). This forces them into the same partition.

- Strictest ordering: For the strictest ordering, also set max.in.flight.requests.per.connection=1 on your producer to prevent retries from scrambling the sequence.

- Order is not always desirable: Forcing order when it’s not required can harm performance and scalability.

Introduction

Apache Kafka is a popular choice for building high-throughput, real-time data pipelines. For a broader, strategic view on the platform, you can read our guide to Apache Kafka for technical leaders. We stream events—user actions, sensor readings, database changes—into Kafka with the implicit trust that they’ll be processed in the order they occurred. But what if they aren’t?

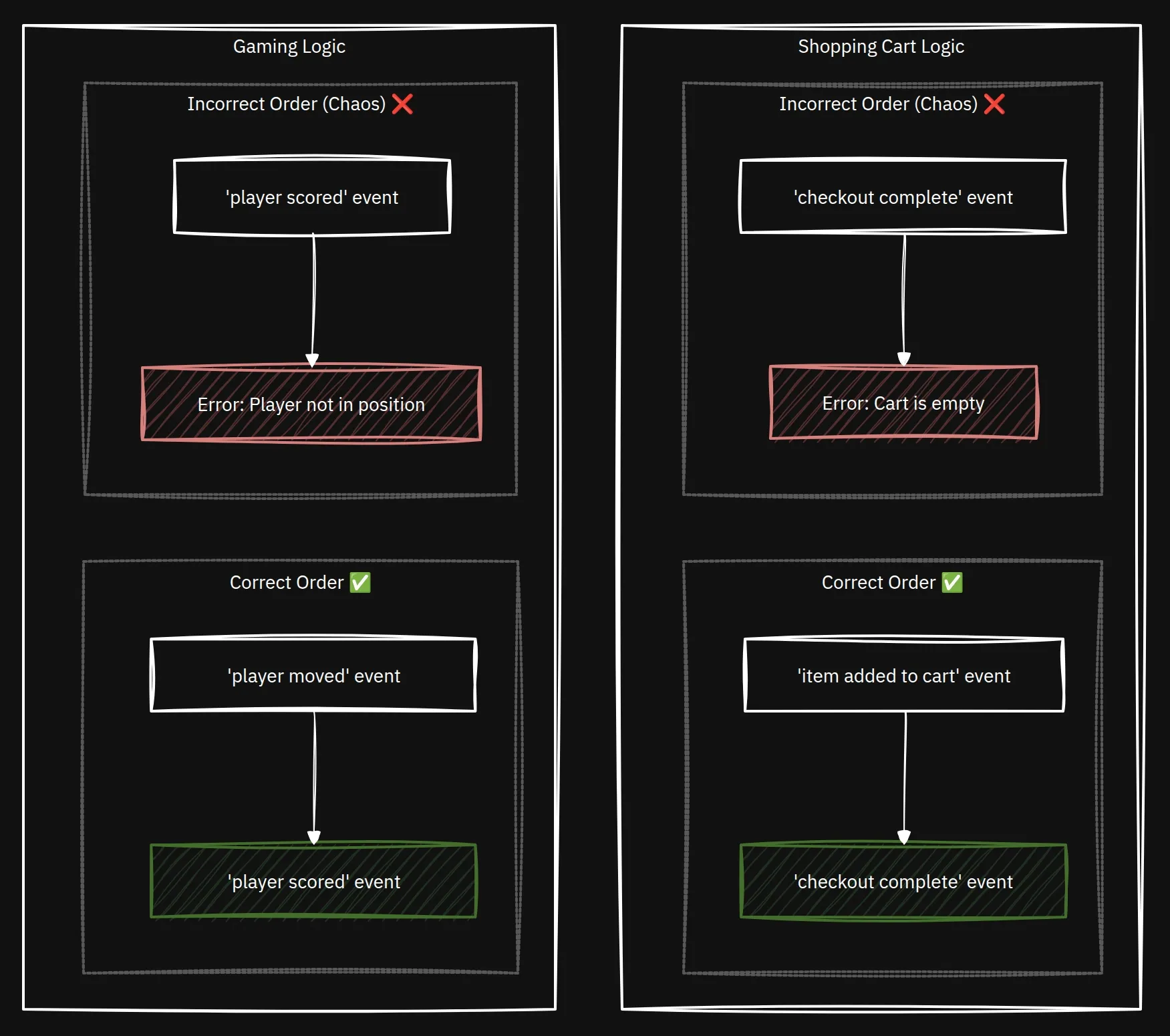

For many applications, the sequence of events is not just important; it’s the entire basis of their logic. An “item added to cart” event must come before a “checkout complete” event. A “player moved” event must come before a “player scored” event. When this sequence breaks, you don’t just get a bug; you get chaos.

Let’s explore why ordering is crucial, how Kafka guarantees it, and how easily you can break that guarantee without realizing it.

The Anatomy of a Ride-Share: An Ordering Story

Imagine a simple ride-sharing application. When you book a ride, a series of events are generated to track its lifecycle. For a single ride, say ride_id: ABC-123, the sequence is non-negotiable:

- RideRequested: The user requests a ride.

- DriverAssigned: A driver accepts the request.

- RideInProgress: The driver picks up the user and starts the trip.

- RideCompleted: The driver drops the user off at their destination.

- PaymentProcessed: The fare is calculated and charged.

If your backend systems process these events out of order, the user experience and your business logic fall apart. Imagine the PaymentProcessed event arriving before RideCompleted. Your system might try to charge the user for a trip that hasn’t finished, or the app UI could confusingly show “Ride Complete” while the user is still in the car. This is a classic case where order is everything.

Kafka’s Golden Rule of Ordering

Here is the single most important concept to understand about ordering in Kafka:

Kafka only guarantees the order of messages within a single partition.

A Kafka topic is divided into one or more partitions to allow for scalability. Think of a topic as a category for messages, like “user_clicks” or “payments.” A partition is a smaller, ordered log within that topic. By splitting a topic into multiple partitions, Kafka can distribute them across different servers in a cluster. This allows multiple consumers to read from a topic in parallel, dramatically increasing throughput and allowing the system to scale horizontally. While messages within one partition are an ordered, immutable log, there is no global ordering guarantee across all partitions of a topic [1].

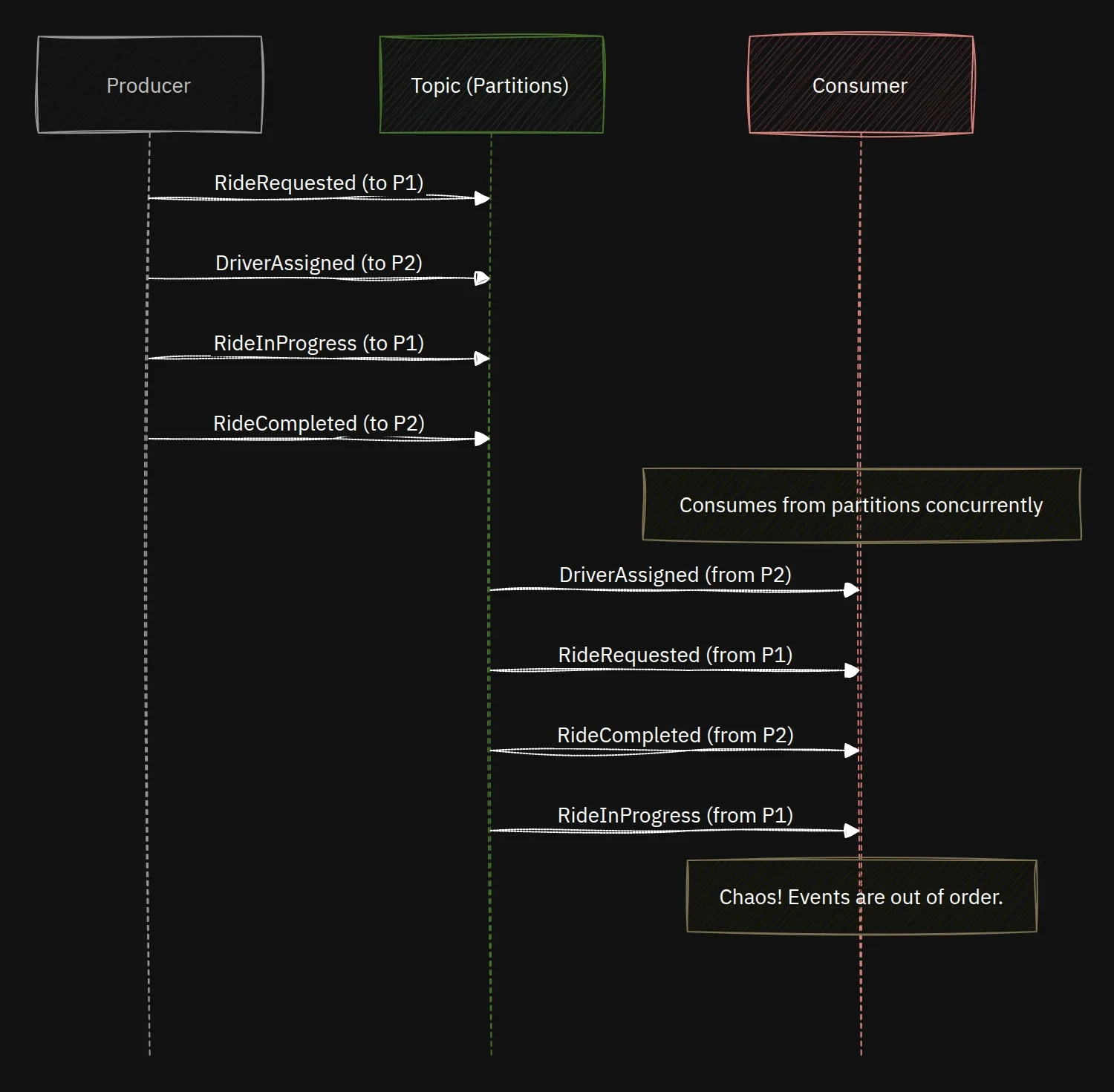

So, how do we end up with out-of-order events for our ride-share? It happens when events for the same ride end up in different partitions.

Let’s visualize this. Here, our producer sends events for ride_id: ABC-123 without a key, and they get distributed across the topic’s partitions in a round-robin fashion.

Because a consumer pulls from multiple partitions at once, network latency or processing delays can cause events from one partition to be processed after events from another, even if they were produced earlier. The consumer handles events in the order they arrive from the brokers, which is now scrambled, and the result is a broken application state.
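The scrambling described above can be reproduced with a small simulation. This is a toy model, not Kafka client code: the functions `produce_round_robin` and `consume` and the chosen fetch order are hypothetical, and plain Python lists stand in for partitions.

```python
from itertools import cycle

EVENTS = [
    "RideRequested",
    "DriverAssigned",
    "RideInProgress",
    "RideCompleted",
    "PaymentProcessed",
]

def produce_round_robin(events, num_partitions):
    """Append each keyless event to the next partition in turn."""
    partitions = [[] for _ in range(num_partitions)]
    for event, p in zip(events, cycle(range(num_partitions))):
        partitions[p].append(event)
    return partitions

def consume(partitions, fetch_order):
    """Drain partitions in whatever order the fetches happen to return."""
    seen = []
    for p in fetch_order:
        seen.extend(partitions[p])
    return seen

partitions = produce_round_robin(EVENTS, num_partitions=3)
# Assume partition 2 answers first, then 0, then 1 (e.g. due to latency).
processed = consume(partitions, fetch_order=[2, 0, 1])
print(processed)
# ['RideInProgress', 'RideRequested', 'RideCompleted',
#  'DriverAssigned', 'PaymentProcessed']
```

Each partition is still internally ordered; only the interleaving across partitions breaks the ride's lifecycle.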

The Solution: The Power of the Message Key

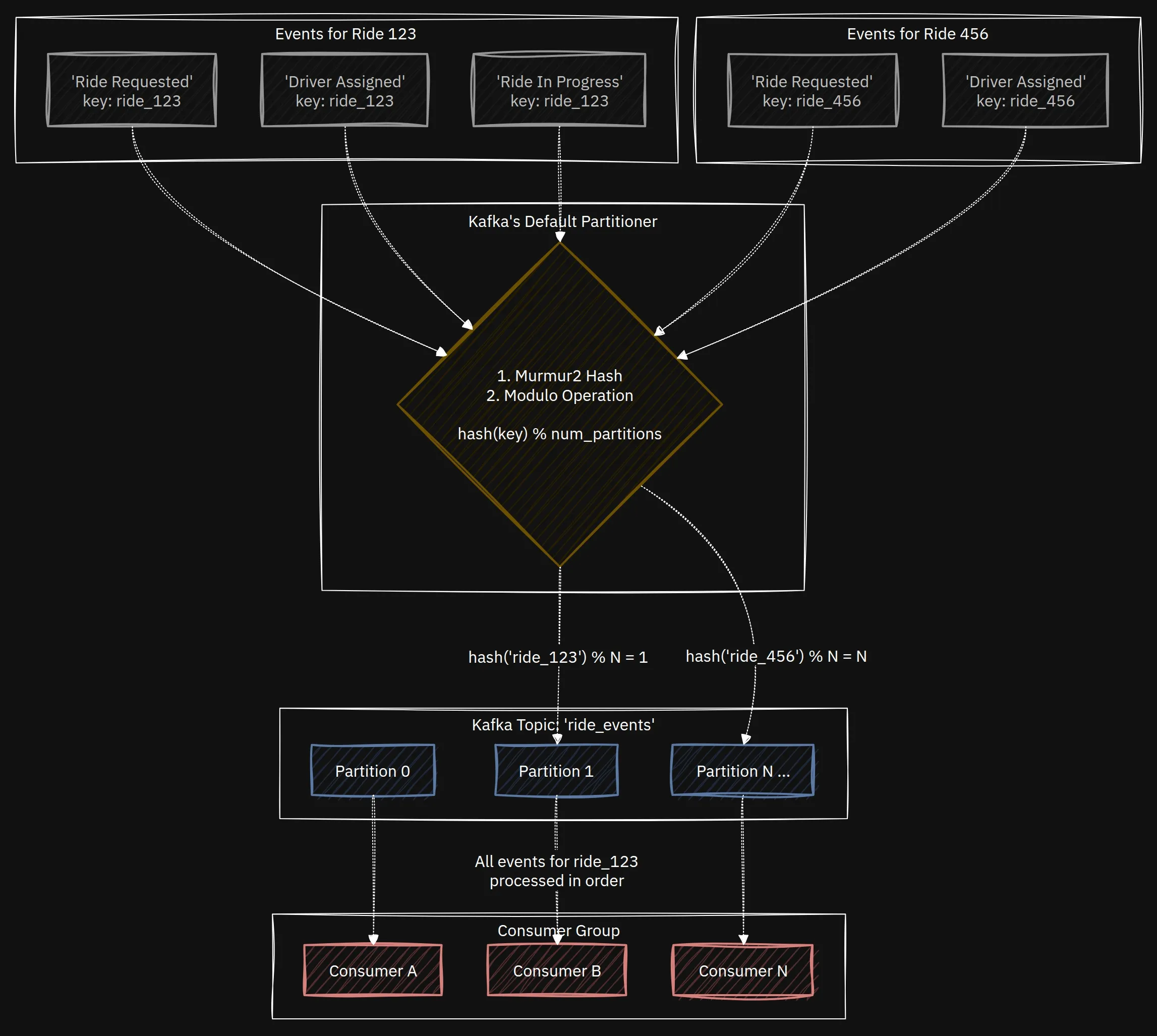

To solve this, we need to ensure that all events related to a single ride are always sent to the same partition. We achieve this by using a message key [2].

When you produce a message to Kafka with a key, Kafka’s default partitioner doesn’t just distribute it randomly. Instead, it computes a hash of the key and maps it to a specific partition by taking that hash modulo the number of partitions.

The Murmur2 hash algorithm [3] spreads keys evenly across partitions.

As long as you use the same key and the topic’s partition count stays the same, your message will always land in the same partition.

For our ride-sharing app, the solution is simple: always use the ride_id as the message key for all events related to that ride.
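To make the key-to-partition mapping concrete, here is a minimal sketch in pure Python. The `murmur2` function is an illustrative port modeled on the hash used by Kafka's Java client, so treat it as an approximation and verify against your client before relying on exact partition numbers. The helper `partition_for`, the second ride `XYZ-789`, and the partition count of 6 are assumptions made for the example.

```python
MASK32 = 0xFFFFFFFF

def murmur2(data: bytes) -> int:
    """32-bit Murmur2, modeled on the variant in Kafka's Java client."""
    m, r = 0x5BD1E995, 24
    length = len(data)
    h = (0x9747B28C ^ length) & MASK32
    for i in range(length // 4):
        k = int.from_bytes(data[i * 4:i * 4 + 4], "little")
        k = (k * m) & MASK32
        k ^= k >> r
        k = (k * m) & MASK32
        h = (h * m) & MASK32
        h ^= k
    tail = length & ~3          # index of the first leftover byte
    rem = length % 4
    if rem == 3:
        h ^= data[tail + 2] << 16
    if rem >= 2:
        h ^= data[tail + 1] << 8
    if rem >= 1:
        h ^= data[tail]
        h = (h * m) & MASK32
    h ^= h >> 13
    h = (h * m) & MASK32
    h ^= h >> 15
    return h

def partition_for(key: str, num_partitions: int) -> int:
    """Hash the key, force it non-negative, take it modulo the partition count."""
    return (murmur2(key.encode("utf-8")) & 0x7FFFFFFF) % num_partitions

# Interleaved events from two rides, each keyed by its ride_id.
events = [
    ("ABC-123", "RideRequested"),
    ("XYZ-789", "RideRequested"),
    ("ABC-123", "DriverAssigned"),
    ("ABC-123", "RideInProgress"),
    ("XYZ-789", "DriverAssigned"),
    ("ABC-123", "RideCompleted"),
    ("ABC-123", "PaymentProcessed"),
]

partitions = {}
for ride_id, event in events:
    partitions.setdefault(partition_for(ride_id, 6), []).append((ride_id, event))

for number, log in sorted(partitions.items()):
    print(number, log)
```

Every event for `ABC-123` lands in one partition in the order it was produced. Note that the result depends on the partition count: adding partitions to a topic later can move a key to a different partition.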

This client-side logic is part of what makes Kafka so powerful. To learn more about how clients discover brokers and manage metadata, check out our deep dive: More Than an Address: Unlocking the Hidden Intelligence of Kafka Clients.

When Order Doesn’t Matter (and When It’s a Bad Thing)

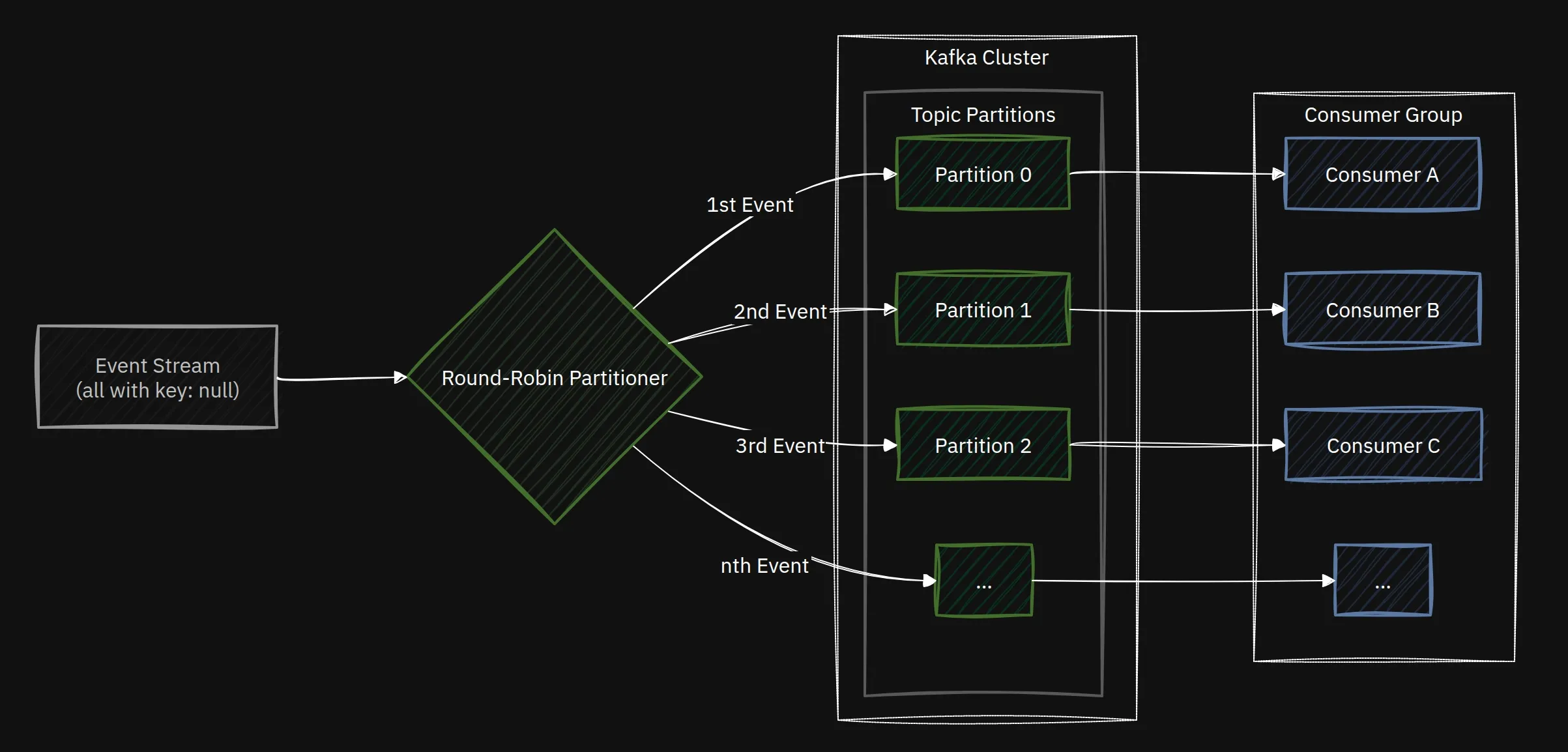

Of course, not all applications require strict ordering. Imagine you are collecting website clickstream data. Each click is an independent event, and you are likely interested in aggregate statistics, such as the number of clicks per page. In this case, forcing all clicks for a given user into a single partition could create “hot spots” and limit your throughput.

For this use case, you would produce messages with a null key. This tells Kafka to distribute the messages in a round-robin fashion across all partitions, which is exactly what you want for maximizing throughput and ensuring an even load on your brokers.

Here is a diagram illustrating this:

Here, forcing an order where it is not needed would be an anti-pattern, as it would unnecessarily limit the parallelism and scalability of your system.
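The hot-spot effect can be sketched with a quick load count. This is a toy comparison under stated assumptions: the traffic mix (one heavy user producing 900 of 1,000 clicks) is invented, `zlib.crc32` is only a stand-in for Kafka's real key hash, and strict round-robin is a simplification (Java clients since Kafka 2.4 use a sticky, batch-oriented strategy for keyless records that still evens out over time).

```python
import zlib
from collections import Counter

NUM_PARTITIONS = 4

# Hypothetical clickstream: one very active user plus 100 occasional ones.
clicks = ["heavy-user"] * 900 + [f"user-{i}" for i in range(100)]

def keyed_load(user_ids):
    """Messages per partition when every click is keyed by user_id."""
    return Counter(
        zlib.crc32(user.encode("utf-8")) % NUM_PARTITIONS for user in user_ids
    )

def round_robin_load(user_ids):
    """Messages per partition when keyless clicks are spread in turn."""
    return Counter(i % NUM_PARTITIONS for i in range(len(user_ids)))

print("keyed:      ", sorted(keyed_load(clicks).items()))
print("round-robin:", sorted(round_robin_load(clicks).items()))
```

With keys, at least 900 messages pile onto whichever partition the heavy user hashes to; without keys, each of the four partitions receives 250.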

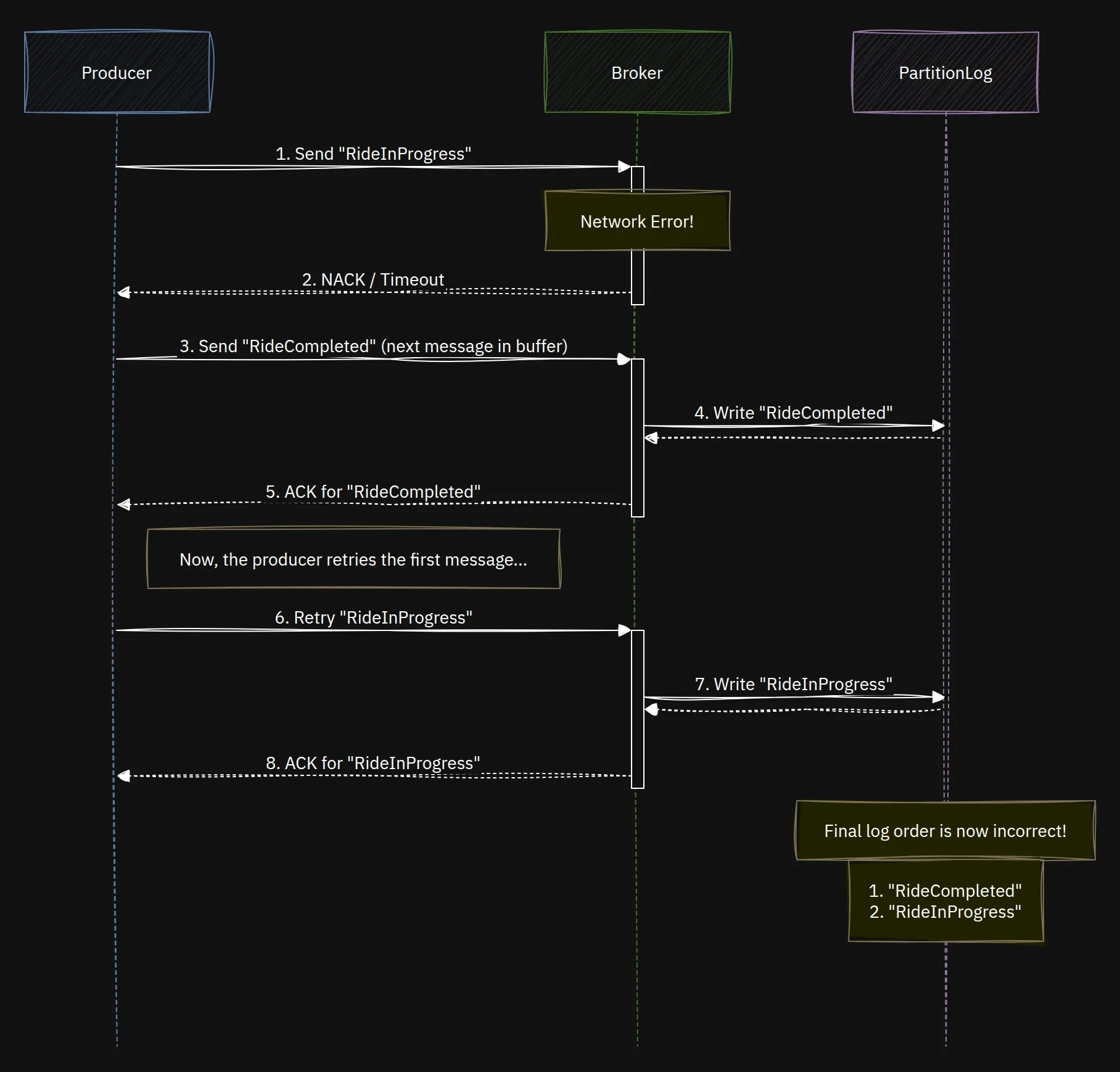

A Final “Gotcha”: Producer Retries

You’ve done everything right. You’re using the ride_id as the key. But you could still, in rare cases, see out-of-order messages. How?

Imagine this sequence:

- Producer sends RideInProgress. The request fails with a temporary network error.

- The producer is configured to retry, but before the retry happens, it sends the next message: RideCompleted. This one succeeds.

- The retry for RideInProgress is now sent, and it also succeeds.

The order of messages in the partition is now RideCompleted, then RideInProgress. Your ordering is broken again!
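The sequence above can be modeled in a few lines. This is a deliberately simplified simulation, not how a real producer is implemented: the function `send_all` and its parameters are hypothetical, each message is treated as its own request, and a failed request is simply retried on the next round.

```python
def send_all(messages, fail_first_attempt, max_in_flight):
    """Return the partition log after sending with a bounded in-flight window."""
    log = []
    pending = list(messages)
    already_failed = set()
    while pending:
        window = pending[:max_in_flight]  # requests in flight together
        retries = []
        for msg in window:
            if msg in fail_first_attempt and msg not in already_failed:
                already_failed.add(msg)   # transient error on first attempt
                retries.append(msg)       # will be resent next round
            else:
                log.append(msg)           # broker appended the message
        pending = retries + pending[len(window):]
    return log

messages = ["RideInProgress", "RideCompleted"]
flaky = {"RideInProgress"}

print(send_all(messages, flaky, max_in_flight=2))
# ['RideCompleted', 'RideInProgress']
print(send_all(messages, flaky, max_in_flight=1))
# ['RideInProgress', 'RideCompleted']
```

With two requests in flight, the later message is appended while the earlier one waits for its retry. With only one in flight, nothing can overtake the failed request.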

For applications that demand the strictest ordering, you must configure the producer to prevent this. You do this by setting [4]:

max.in.flight.requests.per.connection=1

This setting ensures that the producer will only send one batch of messages at a time to a given broker and will wait for an acknowledgment before sending the next one. This prevents a later message from “overtaking” a failed-but-retrying earlier message. The trade-off is a potential reduction in throughput, so use it when ordering is a non-negotiable requirement.
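As a sketch, the setting might sit in a producer configuration like the one below, written as a plain Python dictionary of the kind that clients such as confluent-kafka accept. The broker address is a placeholder and the `acks` value is an assumption added for the example; only `max.in.flight.requests.per.connection` comes from the discussion above.

```python
# Hypothetical producer configuration for strictly ordered delivery.
strict_ordering_config = {
    "bootstrap.servers": "localhost:9092",  # placeholder broker address
    "acks": "all",  # assumption: wait for all in-sync replicas to acknowledge
    "max.in.flight.requests.per.connection": 1,  # one unacknowledged request at a time
}

for name, value in strict_ordering_config.items():
    print(f"{name}={value}")
```

Kafka's producer documentation also describes enable.idempotence=true as a way to preserve ordering with up to five in-flight requests. Whether that applies depends on your client and broker versions, so it is worth checking the documentation for your setup before trading away throughput.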

Conclusion

Kafka provides a powerful foundation for building event-driven systems, but its guarantees come with responsibilities. For a vast number of applications, event order is the bedrock of correctness. By understanding that Kafka’s ordering is a per-partition guarantee, and by diligently using message keys to control data placement, you can build reliable, predictable, and scalable applications.

Always remember: if the sequence of events matters, know your key, and know your partition.

Footnotes

1. Concepts and Terms - Apache Kafka, https://kafka.apache.org/documentation/#intro_concepts_and_terms

2. Kafka Message Key: A Comprehensive Guide - Confluent, accessed on October 18, 2025, https://www.confluent.io/learn/kafka-message-key/

3. MurmurHash - Wikipedia, https://en.wikipedia.org/wiki/MurmurHash

4. Producer Configs - Confluent Documentation, https://docs.confluent.io/platform/current/installation/configuration/producer-configs.html#max-in-flight-requests-per-connection